Notes about ShipItCon 2023

It’s this time of the year again, and another ShipItCon review is due!

I’ve talked about the previous instances of this conference, which happens in Dublin roughly once a year (though it did not happen during the pandemic years). I think it is quite an interesting conference, especially as it revolves around the concept of Software Delivery, in a broad sense. That makes for very different talks, from quite technical ones to others focused more on teamwork or high concepts.

Perhaps because I’m getting older, I appreciate that kind of “high-level” thinking, instead of the usual tech talks diving deep into some core nerdy tech. It’s difficult to do properly, but when it resonates, it does quite heavily.

The conference

The conference itself is run in The Round Room. This is a historical place in Dublin, which actually served as the place for the first Dáil Éireann (Assembly of Ireland) to meet.

The round space and the fact that you sit at round tables give some “Awards” or “political fundraising” vibes, more than the classical impersonal hotel of most tech conferences. The place is quite full of character, and it allows for the sponsors to be located in the same room, instead of in the hall.

There are some breaks to allow people to mingle and chat, but the agenda is quite packed. There was also an after party, which I didn’t attend because I had stuff to do.

As in last year’s edition, CK (Ntsoaki Phakoe) acted as MC, introducing the different guests and making it flow. It’s unconventional, but it helps to keep momentum and makes everything more consistent.

The topic for this year was the unknown, and, in particular, the unknown unknowns.

Talks and notes

Some ideas I took from all the different talks

Keynote by Norah Patten

Things started quite high with Dr Patten, who is set to be the first Irish astronaut. She described her path, from her childhood in the West of Ireland, where she was fascinated with space, especially after she visited NASA. She studied aeronautical engineering in Limerick and later at the International Space University in Strasbourg, where she got exposed to international cooperation, key for space exploration.

She got involved in experiments in microgravity and participated in Project Possum, a program to train scientists in microgravity and spaceflight. One of the highlights of the talk was to see videos of her absolutely ecstatic when reaching microgravity in some of the flights, where you can see that she really belongs out of Earth’s gravity.

Some interesting details she went over were the training in a space suit, where the suit is pressurised and has a lot of noise inside, as well as all her work inspiring other people, both by helping experiments to run in microgravity environments and as a general role model.

What’s slowing your team by Laura Tacho

Laura talked about the different ways of measuring the performance of teams, and how there are now some metrics in the form of DORA or SPACE. But, she argued, those are related more to known unknowns than to unknown unknowns, following the topic of the conference.

All data is data

That includes things like opinions or 1:1 conversations.

She recently studied a lot of teams and found common problems in most cases, in three areas:

- Projects:

  - Too much work in progress

  - Lack of prioritisation

- Process:

  - Slow feedback, both from people (like feedback on PRs) and from machines (like time for the CI pipeline to run)

  - Not enough focus time, meaning time free of interruptions

- People:

  - Unclear expectations, which can lead to a loop of micromanaging: tasks are defined too broadly, so the expected work is not completed, which gets corrected by micromanaging, and the loop starts again from there.

None of the elements of the list is probably surprising to anyone with some experience. The problem is not so much recognising these problems as acting against them effectively.

To continue with the work, she recommended DevEx. Interestingly, she also recommended the book Accelerate, which was mentioned on a couple more occasions during the day. It’s a great book and I also recommend it.

Large Language Models limitations by Mihai Criveti

This fell on the more “technical side” of the talks and, unfortunately, had some technical problems during the presentation. It discussed some of the problems with LLMs and some ways of mitigating them.

The interesting bits that I got there were that LLMs are very, very slow (in Mihai’s words, hundreds of times slower than a 56K baud modem from 1995), and expensive. They are expensive to operate, as being so slow requires a lot of hardware (around 20K in a cloud provider) for “someone” writing at a very slow pace, as we’ve seen. And they are prohibitively expensive to train with new data.

An LLM requires first a training phase, where it learns from a corpus of data. This is insanely expensive. Then comes an operation phase, where it uses that training to answer a prompt. But LLMs don’t have memory; they can only generate information from the training. There are some tricks to “fake” having memory, like adding the previous questions and answers to the prompt. But the prompt has a small limit, and the whole amount that it can generate is around 7 pages of text, and it gets weirder the more it has to generate. This makes it difficult to create applications where either it has to understand specific information (as it needs to be trained for that) or it requires a relatively big input or output.
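To make the “fake memory” trick a bit more concrete, here is a minimal sketch of how previous questions and answers can be prepended to a new prompt while respecting a size limit. This is my own illustration, not something shown in the talk: the function name `build_prompt`, the `Q:`/`A:` layout and the limit measured in characters are all assumptions (real models count tokens, not characters).

```python
def build_prompt(history, question, max_chars=2000):
    """Prepend as many recent exchanges as fit in the budget to the new question.

    `history` is a list of (question, answer) tuples, oldest first.
    The budget is in characters, a rough stand-in for a real token limit.
    """
    tail = f"Q: {question}\nA:"
    budget = max_chars - len(tail)
    kept = []
    # Walk backwards so the most recent exchanges are the ones that survive.
    for past_question, past_answer in reversed(history):
        entry = f"Q: {past_question}\nA: {past_answer}\n"
        if len(entry) > budget:
            break  # Older exchanges are silently "forgotten".
        kept.append(entry)
        budget -= len(entry)
    # Restore chronological order before adding the new question.
    return "".join(reversed(kept)) + tail
```

The important detail is the `break`: once the limit is reached, anything older simply disappears from the conversation, which matches the limitation described above.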

Another interesting note is that LLMs work only with text, so anything that needs to interact with them needs to be transformed into pure text, which makes it difficult to work with things like tables in a PDF, for example.

How to make your automation a better team player by Laura Nolan

Laura already gave talks in previous years and they have always been interesting. She discussed some concepts dating back to the 80s about operators (people operating systems, particularly in production environments) and automated systems, and how the theory of JCS (Joint Cognitive Systems) emerged.

This theory is based on the idea that both automated systems and operators cooperate in complex ways and influence each other. For example, having an automated alarm can actually make a human operator relax and not perform checks in the same way, even if the alarm is low quality.

Looking through that lens, there are two antipatterns that she discussed:

- Automation surprise, the idea that automation can produce unexpected results or run amok. Some ideas to avoid these problems are:

  - Avoid scattered autonomous processing, like distributed cronjobs (she gave a great piece of advice: avoid independent automatic OS updates). Instead, treat them as a properly maintained service.

  - Be as predictable as possible

  - Clearly display intended actions, including through status pages or logs

  - Allow operators to stop, resume or cancel actions to avoid further problems.

- “Clippy for prod” or very specific recommendations and “auto-pilot” for operations.

  - “It looks like you want to reconfigure the production cluster, may I suggest how?”.

  - Very specific recommendations can misdirect if they are wrong, so be careful.

  - Providing ways of understanding system behaviour is often more useful. For example, “Hard drive full, here are the biggest files” can lead to more insight than “Hard drive full, clean logs? Yes|No”
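As a small illustration of the “here are the biggest files” idea, this is a minimal sketch of a helper that gives the operator information instead of proposing an action. It is my own example, not something from the talk, and the function name `biggest_files` is hypothetical.

```python
import heapq
import os


def biggest_files(root, count=5):
    """Return the `count` largest files under `root` as (size_in_bytes, path) tuples."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                sizes.append((os.path.getsize(path), path))
            except OSError:
                # The file vanished or is unreadable; skip it rather than fail.
                continue
    # Largest first, so the operator sees the most likely culprits at the top.
    return heapq.nlargest(count, sizes)


if __name__ == "__main__":
    for size, path in biggest_files("."):
        print(f"{size:>12} {path}")
```

The tool only reports. Deciding what to delete stays with the operator, who can see whether the space went to logs, core dumps or something unexpected.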

Finally, she talked about two types of tools under JCS, amplifiers and prosthetics. Amplifiers are tools that allow the operator to do more, normally in a repeated way. For example, a tool like Fabric, which can deploy into multiple hosts and execute some commands on them. It doesn’t change the nature of the commands, which are still quite understandable. If it fails in one host, but not others, I can probably understand why.

On the other hand, prosthetics are tools that totally replace the abstractions. On the one hand, they allow us to perform things that are totally impossible otherwise. But, at the same time, they make us totally dependent on the underlying automation. Here there are things like the AWS console. What’s going on inside? Who knows. If I try to create 10 EC2 servers and one fails, I can only guess.

Laura suggested building amplifiers when possible and not hiding the complexities. I think it is a bit analogous to the “leaky abstractions” problem. At the same time, in my mind I relate it to my way of looking at the cloud, where, in general, I prefer to use “understandable” blocks as much as possible (instances, databases, other storage, etc) and build on top of that, over using complex “high level rich services”. I may write at some point a longer post about this.

Granting developer autonomy while establishing secure foundations by Ciaran Carragher

Ciaran gave a talk focused on security, and how it should be looked at through the lens of allowing developers their autonomy while establishing secure paths and trying to simplify security. Or, better described, making the easy path secure.

Looking at different perspectives, there are some opposing views. Developers want to have immediate access when needed, to build and test without interrupting their flows, and to be able to perform tasks when they evolve. At the same time, from the point of view of security, there are requirements in terms of minimising risks, following regulation, and ensuring that security is key. So there’s a dilemma in allowing teams to remain performant and innovative, while the system is secure and, especially, the customer data is protected.

He went on to describe several examples from his experience, and some actions taken. Most of that was quite AWS specific, which seemed like his area of expertise. His main recommendations were to find a balance, trying not to bother developers too much while providing the means to be secure; and to engage with experts (in his case, he talked about AWS, but I’m generalising it a bit) as early as possible to be sure not to make silly mistakes. Security is hard!

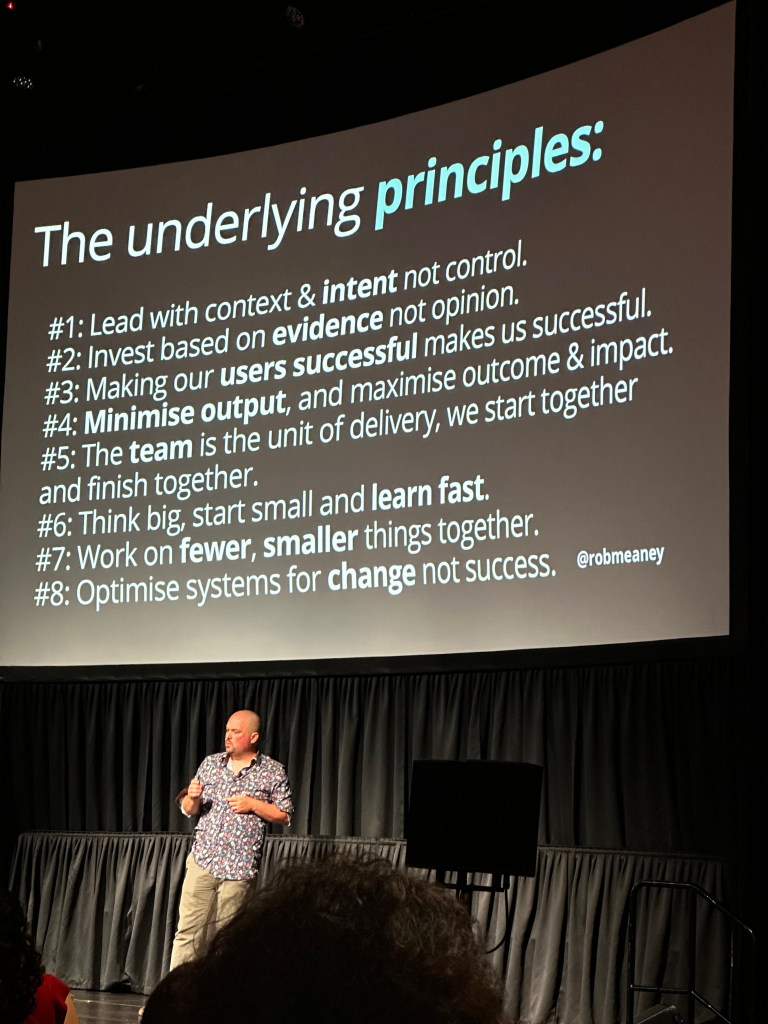

Unpeeling the onion of uncertainty by Rob Meany

Rob described how our developer careers are filled with failures. I think that everyone can relate. Even the most successful software companies release way too many things (products, features) that don’t really get any traction. We are talking about most software releases being a disappointment, even in the best of circumstances.

So we need to, first, embrace this fallibility and be prepared to not be too attached. And, second, try to fail faster, so we can confront our releases with reality as soon and as often as possible.

We spend too much time building the “wrong” solution in the “right” way

He has this idea of the “onion of uncertainty”, which on the outside is more uncertain (more related to the real world and business) and becomes more technical and manageable as we get inside. So a new feature will need to start outside, go inside, and then get back out again to be confronted with reality. He created a list of principles to try to navigate it.

I think it follows quite closely the principles of Agile. But it was a very well constructed and thoughtful talk and he gave quite concrete examples. It was one of my favourite talks of the conference.

Just because you didn’t think of it doesn’t make it an edge case by Jamie Danielson

Following on the unknown topic, Jamie talked about how there are a lot of events in a production system that you cannot really foresee, and how that requires a proper observability structure to be able to detect and address them. She followed a real example and gave a few recommendations:

- Instrument your code

- Use feature flags to allow quick enable and disable of new code

- Iterate and improve in fast loops: reproduce, fix, rinse and repeat
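As an illustration of the feature flag recommendation, here is a minimal sketch of new code sitting behind a flag next to the old path. It is my own example and not from the talk: the `FEATURE_<NAME>` environment variable convention and all the function names are assumptions. A real system would normally read flags from a flag service or a config store so they can change without a deploy; reading the environment just keeps the sketch self-contained.

```python
import os

TRUTHY = {"1", "true", "yes", "on"}


def flag_enabled(name, environ=None):
    """Return True when the (hypothetical) FEATURE_<NAME> variable is switched on."""
    environ = os.environ if environ is None else environ
    value = environ.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in TRUTHY


def legacy_greeting(user):
    return f"Hello, {user}"


def new_greeting(user):
    return f"Welcome back, {user}!"


def greeting(user, environ=None):
    # The new code path is only taken when the flag is on, so it can be
    # disabled quickly if it misbehaves in production.
    if flag_enabled("new_greeting", environ):
        return new_greeting(user)
    return legacy_greeting(user)
```

The old path stays in place until the new one has proven itself, which is what makes the fast “reproduce, fix, repeat” loop safe.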

Panel discussion moderated by Damien Marshall, with Laura Nolan, João Rosa, Diren Akko and Siún Bodley

The classical panel discussion about the topic of the conference: the unknown. Some of my notes:

- Learning from failures

- Importance of documentation

- People as single points of success

- Team’s autonomy is key to reduce risks

- Think outside of your own area

- Before release, imagine that a very bad thing has happened (“pre-mortem” meeting)

- Importance of instrumentation

- Prepare in advance for stressful days (release, etc), prescale

- Monitor what your users are experiencing, to properly prioritise fixes. If the user doesn’t access it, is it worth fixing?

- Bring observability as part of the dev process

For some strange reason, after all the unknown talk, I got a bit disappointed that Damien Marshall didn’t sing “Into the unknown”. But it’s just me, I guess.

The hidden world of Kubernetes by David Gonzalez

David is a Google Dev Expert at GCP, and he went into the metaphor that Kubernetes is the equivalent of an airport. There can be many kinds of planes, but airports need to be standardised to accept all kinds of them, and they are incredibly complex structures that streamline the operations of the planes.

Kubernetes is an API as DSL modelling of the concept of the software deployment

If you are embarking on Kubernetes (and he argued that many companies don’t need to, and can work with a simpler orchestration approach), you need to transform your processes to fully embrace it, as some of the traditional software practices are opposed to it.

He set a list of ideas to make it work:

- Fully embrace the platform. That means both Kubernetes and the underlying cloud platform. If you are in AWS (or Azure, or GCP), go “all-in”

- Deliver often and be ready to roll back

- Don’t run Kubernetes if you don’t have the scale or resources

- “Build it and they’ll come” doesn’t work. You’ll need an SRE team that deals with the platform, where 90% of the work should be automated, and the 10% missing will be plenty to keep everyone occupied

He described that the SRE team is key, and they need to build the platform, but also evangelise about it and help teams with manifests (again, fully embracing all the features that Kubernetes offers). The real value of an SRE team is that it is able to scale logarithmically as the rest of the company grows linearly. In non-technical terms this means that a small team can support a pretty big organisation.

Innersource, open collaboration in a complex world by Clare Dillon

Clare talked about the concept of Innersource, which, as she described it, is “Open Source within a firewall”, using the concepts and practices of Open Source inside a company.

The main practices are that the code is visible to everyone in the company; there’s a big focus on documentation (like READMEs, roadmaps, etc); contributing from outside a team is possible and even welcome (including details like enabling mentoring or reviewing the code); and that there’s community engagement.

Right now it is gaining quite a lot of traction, thanks to different aspects, like the increase in remote and hybrid work; the war for talent and the ability to attract people already used to working in Open Source; and the gains in code reusability and developer productivity.

Automating observability on AWS by Luciano Mammino

Luciano described an observability tool that he has been working on that is aimed at serverless applications in AWS, SLIC Watch.

In essence, it is a tool that will enhance existing definitions of serverless applications through CloudFormation to add observability tools like CloudWatch, X-Ray and other available options. It aims to solve 80% of the definition work, by giving a good “starting point” that then needs to be tweaked.

It sounded like a great tool for the specific case, and they are working on expanding and improving it.

Final thoughts

As in previous years, and as you can see by the amount of notes that I take (I can assure you that I have many more in my notebook), I think it is a fantastic conference and it’s full of thought-provoking ideas. I may not agree with all of them, but they make me think, which I appreciate.

If you are interested in the difficult art of releasing software and can be around Dublin in September, I definitely recommend attending. It’s an intense day, but full of ideas that will keep your head busy for a while afterwards!

The unavoidable review of Indiana Jones and the Dial of Destiny

New Indiana Jones movie! Amazing!

It seemed that we would never get another one after Last Crusade Kingdom of the Crystal Skull! 15 years already! I’ve always been a great fan of the character, and of course I had to go watch the new adventure.

What’s my opinion? Well… it’s fine. Is it GREAT? I don’t think so. But it’s a fun and enjoyable adventure movie, 100% worth watching. Does it rise to the level of the best instalments of the saga? No, but because those are the best of the best in their genre, and arguably some of the best movies of the last 50 years.

Raiders of the Lost Ark is an absolute masterclass in pace, cinematography and action pieces, and a movie that spawned countless clones with leather-jacketed adventurers in the jungle. Last Crusade is a perfect comedic adventure full of soul with a bulletproof script.

Dial of Destiny is pretty solid, and, in comparison with Kingdom of the Crystal Skull (universally considered the weakest of the first four movies), it’s pretty consistent. It maintains a good level all the time. Kingdom of the Crystal Skull is irregular in comparison. It has great heights, like the motorbike chase scene, and astonishingly ridiculous lows, like the infamous “vine swinging in the jungle” one.

I appreciate Kingdom of the Crystal Skull for trying to update the setting from the 30’s serials to the 50’s B-movies about aliens, with the background of the “red scare”. But it’s a big risk that ultimately doesn’t pay off as well as it should when looking at the series as a whole.

Compared to that, Dial of Destiny fits more traditionally in the “Indy feel”. There are evil Nazis and a couple of McGuffin artefacts to chase around the world. Though it takes place at the end of the sixties, there’s no effort in updating the movie to include elements of a period-accurate Bond movie.

From here on, we move to SPOILER territory. If you want to stop here, the basics are “The movie is fun and worth watching in a movie theatre if you’re a fan of the character, but it’s not going to blow your mind”.

Now, on to the spoilers-filled section, with a list of things I liked and things I didn’t like, always in my personal opinion!

Revisiting Star Trek Nemesis

I recently rewatched ST: Nemesis, which I went to see at the movies, so around 20 years ago.

(sorry, there will be some spoilers, I’ll assume that you’ve seen the movie)

My impression back then was that it was not great and broke the “even/odd Star Trek movies rule”. This rule states that the odd Star Trek movies are bad and the even ones are good, so after Star Trek Generations (I’m starting at The Next Generation movies to not overcomplicate this: number 7 and therefore bad), it was Star Trek First Contact (number 8 and good), then Star Trek Insurrection (number 9 and bad) and then Star Trek Nemesis, which should be good, but it wasn’t.

The movie back then was not very successful at the box office, and more or less ended the run of Star Trek in cinemas, which had been quite long up to that point, and required a reboot years later. While franchises never truly end, I think we can agree that there was a significant pivot around this era, to the point that newer series and movies are sometimes referred to as “NuTrek”.

So, I just rewatched it with low expectations.

And I quite like a lot about it!

It’s probably not a “good movie” by any standards, and probably not even a “good Star Trek movie”, and it has a bunch of problems, but I think it has several good points that I actually liked:

- First of all, it feels like a “premium TNG episode”, but in a good way. The production designs are in line with the things we have seen. The Romulan Senate, for example, totally belongs to the aesthetic of the series, but obviously the budget of a movie helps in making it bigger and bolder.

- The space battle in particular is quite well done, feeling bigger and better, but following somehow the parameters of “Star Trek space battles” of the era.

- The chemistry between the actors is truly something. One of the high points of TNG was the fact that the whole cast were good friends. They really enjoyed working together, and that shows on screen.

- It’s quite interesting to see a young Tom Hardy! He doesn’t look anything like Patrick Stewart, though…

- There is some truly great illumination. It also differs from the “TV series” look. I liked in particular this scene where they focus on Troi’s eyes (the scene can be watched on YouTube)

Sure, it’s a bit cheesy, but I think that this kind of “dramatic illumination” has disappeared, and I find the current photography quite bland in general, so going back to some older movies I actually get quite excited at times when they are overly dramatic. I think it adds a lot of character.

- The ending with Data’s sacrifice felt like a good ending for the character. It is not oversold, and it was done totally in character. I also liked the previous scene where Data jumps from one ship to the other.

- I liked the avoidance of destroying the Enterprise, instead leaving it severely damaged. Destroying the Enterprise in the movies has become a trope in itself, and you can see here that they were close to crossing the line.

At the same time, I also think that it has some problems:

- The script tries to do too many things at the same time, and the plot gets confusing because of that. The movie also appears to have been cut quite aggressively. There are some themes regarding copies and “what makes each one unique” in the characters of B4 and Shinzon, but I don’t think they are well developed. I don’t think there’s anything interesting about the fact that the antagonist is a clone of Picard, nor is it used in any meaningful way.

- The “mental invasion of privacy” (to not use worse words) is particularly strange, and appears to be totally out of place in the Star Trek universe.

- The CGI shows its age. It’s not particularly terrible given that it’s a 20-year-old film, but it’s curious how old digital effects are so noticeable, and lack the warmth of old practical effects, even when not great.

- Too many actors that are not properly used. In a TNG movie it is unavoidable that some of the cast is going to have minimal screen time, like Gates McFadden or LeVar Burton here. There’s simply no space in a motion picture for such a big TV cast. But I think that Ron Perlman and Dina Meyer are massively underused here. The Viceroy character in particular appears to be the equivalent of a James Bond henchman, but lacking something that would make him memorable.

In summary, I enjoyed watching it, and I think that it improved from my memory of it, which is always a nice effect.

Notes about ShipItCon 2022

Well, here we are again. Back to going physically to a place where people are talking for an audience in a structured way. It’s been quite some time.

I’m not going to deny that the feeling was a bit weird and that I got a bit (extra) anxious about being in a place with so many people.

I’ve talked about a previous ShipItCon in this blog. It is one of my favourite conferences that I’ve been attending. There have not been too many editions, this was just the third one, but all of them have been full of interesting talks.

One peculiarity is that, being focused on a nebulous concept (shipping software) more than a particular technology or area, it allows for stretching the knowledge into areas that you are not used to. Sort of thinking outside of your own box. I tend to get a lot of notes and ideas to think about later.

The conference itself

First of all, let’s start by saying that the conference was impeccably run. It was hosted, as it has been in the previous editions, in The Round Room, which is an historic venue in the centre of Dublin.

As its name implies, it’s a big round room, which has its own vibe and makes it distinct from other venues.

The whole conference had its own MC, CK (Ntsoaki Phakoe). She introduced all the different speakers and set the flow of the conference. The concept of a general MC for conferences is something that I’ve only seen in this one, but it’s a great one, and CK always does a great job at it.

The main theme of the conference was resilience. This being a word we have talked about a lot in the past few years, it seemed like a good general topic to go for in this ShipItCon 2022, so there were a lot of ideas about how to make software, and the release of it, more resilient, as we will see. I liked that, the conference being the way it is, it included not only technical ideas about it, but also ones related to people.

Talks and notes

Some ideas that I took on the different talks.

Keynote by Cian Ó Maidín.

Cian talked about his company, NearForm, and how a business based in County Waterford was initially strongly hit by COVID, but then later went on to develop an open source COVID tracker that was used by multiple health authorities across the world.

He talked about a lot of the different challenges of working with health organisations at that critical time, under huge pressure, and the urge to help without thinking of profit when the occasion arises.

Failure: A building block, by Nicole Imerson

Nicole talked about her podcast Failurology, which describes different failures and presents how they came to be, and what lessons we can learn from them.

The more uncomfortable the failure, the more we need to talk about it.

She categorised failures into three kinds:

- New territory. The edges of technology. It’s very difficult to foresee the problems, as new ground is being covered. She used the installation of the first transatlantic cable in the XIX century as an example.

- Mistakes. Human errors or others. Things that could be avoided, but for some reason, are not. Sometimes, things go wrong. She talked about the 2003 North East blackouts, which affected parts of Canada and the US.

- Deliberate. When the risk is accepted as part of the process. In most cases, this can be driven by profit. I think that, in others, this can be a trade off that can be worth it.

As an example of something that was not, she presented the case of the Boeing 737 MAX and its crashes.

Failure happens by Filipe Freire

In this lightning talk, Filipe talked about some failure stories and presented four principles that are quite interesting:

- Dive deeper. Running an ongoing system is a challenging task that will require fixing a lot of unexpected problems. With the right mindset, that can focus the team, instead of leaving it feeling lost or full of embarrassment.

- Scrap! Make everything scriptable, and nothing sacred, so it can be adjusted properly.

- Feedback loops, especially when interacting with customers, to be sure that new products are used and improved for the customers.

- Empathise. Most problems are people problems.

Don’t give up! A tale of a system impossible to scale by Nicola Zaghini

Nicola talked about how the concept of resilience moved, around the year 2000, from being an obscure term related to wood properties to the concept that we use now.

He defined resilience as the capacity to persist, adjust or transform while maintaining the basic identity, and emphasised that a resilient mind is more important than a resilient system, as the first will find a way to make the second.

Stop developing in the dark by Noel King

Noel talked about the time lost due to bad code, and how we developers mostly operate in situations with a lack of data, which causes frustration.

He talked about how we can use data driven techniques to improve the delivery and development of code by selecting an area of focus, agreeing on metrics to track that area, setting goals, tracking progress and, finally and very importantly, celebrating the successes.

Your app has died and that’s OK by Anton Whalley

Anton talked about the status of tooling for errors that are specific to production, and in particular, for fatal errors. They can be very difficult to track, as the production environment is normally undebuggable, logs capture known unknowns and stack traces may not capture the whole situation.

Crash analysis tools have been around for a long time, but the current tooling is adjusting to new environments, like containers and other distributed systems.

Standardisation & effects in resilience by Christine Trahe

Christine talked about how the standardisation of different processes can help not only in technical aspects, like tech debt, duplication and reliability, but also in mental aspects, like reducing the cognitive load and the learning curve for projects, and also reducing the possibility of burnout.

She explained several examples of standardisation to reinforce these concepts, from purely technical stuff, like CloudFormation, to others more related to social aspects, like knowledge sharing or incident management.

Panel with Sheeka Patak, Stephanie Sheehan, James Broadhead and Paul Dailly, moderated by Damien Marshall

An interesting talk discussing different aspects of running software services. Some random ideas that I took note about:

- Be conscious of time spent on systems that are being phased out. Perhaps it is better to accelerate the phase-out than to fix them.

- Prepare plans with actions in case something needs to be fixed. If a pre-agreed situation happens, then activate the plan. For example, if a certain error appears more than three times, then fix it.

- Formalising on-call can lead to people deciding that the compensation is not worth it, and end with a worse system than the non-formal one.

- One of the main roles of senior people is to act calmly in stressful situations and give confidence to less experienced engineers. Also to de-stress situations and provide external viewpoints.

- Culture is very important.

- Failure happens, it needs to be accepted as part of the process.
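The “pre-agreed plan” idea from the list above can be reduced to a very small piece of code. This is a minimal sketch of my own, not something presented in the panel: the class name `FixTrigger` and the error keys are hypothetical, and the threshold of three comes from the example in the notes.

```python
from collections import Counter


class FixTrigger:
    """Count occurrences of each error and signal when the agreed limit is passed."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.counts = Counter()

    def record(self, error_key):
        """Register one occurrence; return True when it has appeared more than `threshold` times."""
        self.counts[error_key] += 1
        return self.counts[error_key] > self.threshold


trigger = FixTrigger(threshold=3)
for _ in range(4):
    if trigger.record("payment-timeout"):
        print("Agreed limit passed: schedule the fix for payment-timeout")
```

The value is less in the code than in agreeing the threshold beforehand, so nobody has to argue about priorities in the middle of an incident.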

On-call shouldn’t be a chore, by Hannah Healey and Brian Scanlan

Hannah and Brian described some of the elements of on-call at Intercom, and how some aspects of on-call can be empowering, like fixing actual problems, or terrible, like the possible disruption of personal life.

Intercom went through an internal rethink of the process to try to decouple on-call from team membership, review the quality of the alarms, check how many people actually need to be on-call, and, in general, try to make on-call something to be proud of.

They moved to a volunteer system, where the principles were aligned with the values of the company. As part of that, they included clear support for people on-call by introducing what could be described as “on-call for on-call”. They also put the accent on reviewing the problems that appear during on-call and using the time to fix them, as well as on making on-call a celebrated part of the company.

They described these elements as important parts of Intercom, and dependent on their culture, which means that each road will be different and needs to be optimised for the specific people.

A formula for failure, by Brian Long and Julia Grabos

Brian and Julia described the project of migrating to a new language they created for their application, to address several limitations, in particular, composing JSON with a templating system.

The project took around a year to release, and while it was a success and customers liked it, their alternative plan in hindsight would have been to try to work in smaller increments to release it partially, as much as possible.

Ditch the template, by Laura Nolan

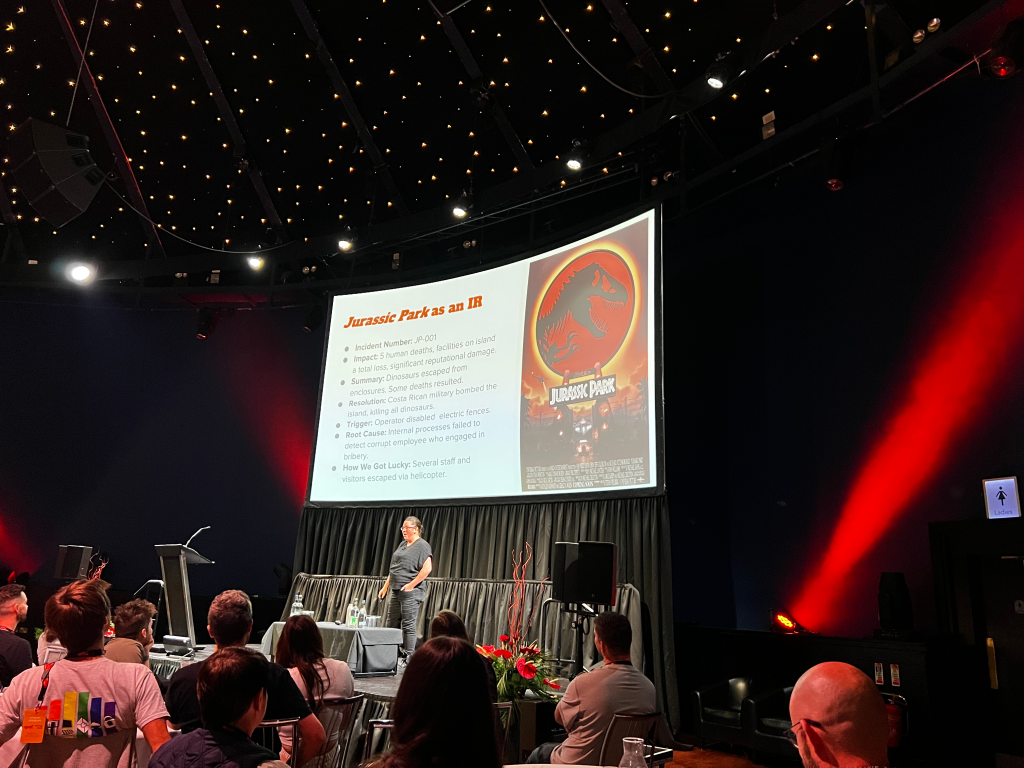

Laura shared her experience writing public incident reports that have generated a lot of attention, and how to make them engaging.

The value of creating this kind of document, in public, is to share knowledge, creating thoughtful reflection on what actually happened and allowing long term storage and documentation of problems. It also helps with being transparent with customers, who can understand the different problems, and it generally lifts the industry to a better ground.

She said that the main value of incident reports is the learning that they produce. And that’s difficult to achieve just by routinely filling in a form.

Laura described several aspects for engaging incident reports:

- Supporting the reader. The report should be free of jargon and clear to read, and it can use links to go deeper into concepts.

- Be visual, reinforcing the concepts with graphs, timelines, screenshots or other creative elements.

- Analysis. If the incident report is a story, the analysis is the moral of it. What should you get from what happened? It also helps to get a feeling of resolution.

- Craft. Elements like using simple language, using headings to avoid a wall of text, choosing a creative title to help searching for it later, keeping a consistent tense and avoiding turning it into a sales pitch.

Final thoughts

I really enjoyed the conference and I think it was full of great content. I think that they do a great job of selecting great speakers who show a lot of different aspects of software development. I always learn some things, and seeing what different teams are doing in areas like on-call, release and monitoring, among others, is always interesting.

I definitely recommend going to the next one if you’re interested in these aspects! Hopefully it will be earlier than the three years we had to wait for this one.

Interview in the Network Automation Nerds Podcast

I had a nice chat with Eric Chou on his Network Automation Nerds Podcast about some of the topics that I’ve talked about in my books, like Software Architecture and Automation. We also covered topics like how I started my journey into Python or the difference between different roles.

The chat is available in podcast format

Here for a direct link to the podcast.

and in video

Again, here is a direct link to the video.

I think there were a lot of interesting topics and I hope you enjoy it!

A year in weightlifting

At the end of 2020 I had to take very serious action to lose weight, for medical reasons. The COVID pandemic and being at home, I guess, made my body mass go over some threshold, and the doctor had a pretty scary chat with me.

So I started talking with a nutritionist and started a plan.

The spoiler is that it worked. I have lost almost 40 kg so far since I started a year and a half ago. I’m close to what I considered “the ideal situation” when I started, so I’m still working on it.

For the first 6 months, more or less, the main focus was the food. I also started walking around 5 km every day, something that I had missed since the start of lockdown. I used to walk to work, and I always liked it. Progress was quite noticeable during that time.

After that, the nutritionist told me to start doing strength exercises to increase my muscular mass.

I had never done anything like that. I’ve never really been a sports person, and it has all been a novelty. Now that I’ve been working out pretty actively for a year, I’m going to share some of my thoughts on the process.

First of all, I’ve been working out mainly at home. I went to a gym for a month, but I quickly came to prefer exercising at home. It’s faster and more convenient than moving to a different place, and I can integrate it better into my day. It’s true that you have more resources in a gym, but most of the time you are not using them fully.

Probably because I’m not used to going, there is a lot of “gym etiquette” that I don’t know. Nothing that cannot be learnt, but I never got to the point of being comfortable. I guess that at home I’m also less worried about the interaction: having to wait to use something, blocking someone from using it or even being judged by others.

In any case, I found out that I like working out with the Apple Fitness+ videos. The system itself is well constructed and can be started on different screens, from the phone to a TV using Apple TV. It connects to your watch and gets heart rate and calorie info. I’m already invested in the Apple ecosystem, so it’s natural to use. As for the exercises, they just require some dumbbells and are easy to do at home, not even demanding too much space. The instructors are nice and “human”, meaning that they don’t look like robots performing mechanically impossible feats.

All exercises are properly explained, including some modifications to make them more accessible.

I had to buy some dumbbells, but it’s a relatively small investment. I got some that can be expanded with extra weight, so I was able to increase the load over time. They are also as small as possible, to save space storing them.

Routine

Motivation alone is not a great way of maintaining the effort long term. In my case, the key element is to create a habit that I can sustain.

This needs to not rely too much on motivation, and instead work by repeating things over and over, even if you don’t feel like doing them.

This includes making some space and time where you can exercise. For example, I go out for a run after finishing work. Reserve time in your calendar, if necessary.

I never want to do the exercise, and I never end up feeling energised by it, as other people do, but I sort of do it out of duty. I feel good when I finish in the sense that it is something that I have to do, no matter what. Over time, this becomes more and more natural to do, even if there is not a single day when you truly want to do it. It’s similar to brushing your teeth. It’s not something that you think too much about doing or not, you just do it.

Another key element, for me at least, is that I’ll do it, but I don’t have the same energy every day, and that is fine. Some days I’ll take it a bit easier if that’s the case, but the counterpart is that when I’m feeling like I can do it, I will go all in. And the interesting part is that there are more good days than bad days. The idea is to constantly challenge yourself, to the point that sometimes it gets a bit scary whether you’ll be capable of doing it.

Part of my habits is weighing myself every day. I don’t recommend it, as weight can go up and down for unclear reasons, and sometimes that can be demotivating. That said, I like the daily data, and to me it is easier to do that than a weekly weigh-in, which is probably best for most people. It is important to do it at the same time and in the same conditions. After a while, it provides valuable trends.

Ah, the wonders of getting feedback…

I think that after all this time, I have the habit quite solidified, but it doesn’t mean that each individual day is easy. It is not. But I do it because I am used to it.

After a year

I’ve been quite consistent with exercising and diet over the last year. Well, over the last year and a half in most regards. Anyway, this has been my first experience with doing exercise regularly and, in particular, trying to build up muscle. This has been a process with a number of surprises, or comments.

- Honestly, I haven’t perceived a massive amount of change in how I feel in general. Previously I wasn’t feeling bad in any obvious way, and I was active, walking a lot, which I think allowed me to not feel tired all the time. A few areas have improved, though. I can definitely perceive an improvement in carrying groceries and other bags, which is quite noticeable. It also helped with RSI problems in my hands. Before, I sometimes had some soreness in my knees that seems to have gone away, but it was rare to start with.

- Measurements, on the other hand, have improved quite noticeably. Things like blood pressure, blood sugar and other objective measures are much, much better. These things are difficult to perceive subjectively, but are big improvements in overall health.

- Physically there’s obviously a big change. Not only in less weight, but this is the first time in my life that I can see some muscle in the upper body. I’ve always been naturally muscular in my legs, probably combined with the fact that I’ve always liked to walk, but my arms and shoulders feel strange having some sort of definition.

- In that regard, I’ve been surprised by the location and function of some muscles. It’s weird that you don’t really know how your body actually IS. For example, the location of the biceps is a bit different than I thought, as it is slightly inclined in your arm, towards your chest, instead of pointing perfectly forward. That surprised me, for some reason.

- The one muscle that surprised me the most is the triceps. This is the muscle on the back part of your upper arm, which works to extend it. It works in opposition to the biceps. This muscle sort of moves around the arm: when the arm is extended, it is more prominent on the upper part of the arm, closer to the shoulder. This is actually when it’s performing its function. But when the arm is contracted, it is more prominent closer to the elbow. It works in a very different way from the biceps, which works in a more contracted/relaxed way, as you’d expect.

- You even feel different muscles from the ones you are used to. Certain exercises will force you to use muscles that you have but are not aware of. Also, exercising in a certain way will make you way more aware of where specifically the muscle is and when you are using it, sometimes referred to as the mind-muscle connection. And yes, sometimes the next day parts of your body you didn’t even know about hurt.

- Another weird thing about your body is that it’s not entirely symmetric. When you develop muscle, it won’t appear on both sides of your body at the same time. The difference is small, assuming symmetric training, but you can notice it, which is quite strange.

- Working out regularly and hard makes you appreciate the insane amount of effort involved in it. And pure invested time. You try to reach your limit constantly and it never becomes easier. Perhaps the habit makes it more manageable, as you know what to expect, and after a while, the recovery is faster. But each and every minute costs. I could use some good old 80s montage to speed up the process.

- My least favourite exercise is push-ups. They are awful. I was really, really bad at them, so I started doing them more often, adding extra push-ups here and there to actively improve the execution. I still hate them, but I notice a great improvement in doing them. There’s a lesson there, but I still don’t like doing push-ups.

- Resting is a very important part of the process that should not be overlooked. Given that I take daily measurements, I can see that a day when you don’t rest as you should (this happened, for example, with some work-related problems where I needed to stay up at night) is reflected quite clearly in your weight. It really helped me to try to get my 8 hours of sleep every day, as well as to respect rest days.

- The moment you search for some information online, you start seeing an insane amount of recommended videos. It’s clear that the world of fitness is really big, and full of all kinds of things, from tips to products. One can fall into a rabbit hole of YouTubers talking about supplements, proper form or all kinds of tips. It’s also easy to see that there’s a lot of shady advice out there.

- This happens in combination with another interesting thing. Clearly you can start feeling body dysmorphia, meaning that you are way more aware of your body and the parts that you don’t like about it. As you start being bombarded by videos and images of people with much better-looking bodies than yours, it’s unavoidable to make comparisons. I’ve never been one to worry much about how my body looks, but I can now feel myself being more aware of that. You can clearly see how this can get out of control.

- This happens even if my objective is not really to be “jacked” or “muscular” or anything like that. But it’s somehow unavoidable to notice things that I wasn’t noticing before.

It’s still a process. I am still a newbie in all of this, and I still feel like I have a lot to learn and a lot to go. I’m still surprised that I am following this, and we’ll see how it goes after year two…

The Many Challenges of a (Software) Architect

Software Architecture is a fascinating subject. The objective of a solid architecture for a system is to generate an underlying structure that separates components in a meaningful way but, at the same time, is flexible enough to allow the system to grow in capacities and functionalities. It should be performant, reliable and scalable within the required parameters, while being as easy to work with as possible.

Even worse than that, the work has to be done at the same time that a myriad of other competing priorities are being handled, new functionalities are added and costs are kept under control.

And, of course, while being as simple and elegant as possible.

In essence, nothing new to anyone doing software development…

After all, any program, even a very small one, has a certain architecture in its design. Even when tiny, any structure in place can be rightfully understood as the architecture of the system.

The main difference when we talk about Software Architecture is that we usually refer to systems that already have a certain size, so multiple people are working on them. The view changes from “how much can fit in the head of one person” to “how the work can be divided so it can be done effectively”. A single person can have a strange way of doing things, but it makes sense for them.

And that’s the crucial element: the involvement of different people and, more commonly, different teams in the process. Esoteric divisions that make sense only for their creator are no longer a good idea.

I’ve been involved in a lot of architecture discussions and processes in the last 3 to 4 years. First, as part of a group that was trying to give technical direction across the company where I was, and now in my current role as Architect, where it is a big part of my daily duties.

And it’s almost all about the communication. For example:

- A very difficult task is to transmit the vision in your head or design to different people who may have other priorities and perhaps don’t see things in the same way. Most of the time it is because they don’t perceive the improvement in the same way, as they are focused on other tasks.

  This is similar to trying to teach someone to use a shortcut to copy/paste instead of going to the menu and selecting it. Sure, it’s faster, but they don’t see the point because they are focused on writing and not on learning how to be faster. A lot of architectural decisions are about making changes for improvements that may not be immediately obvious, like making processes more resilient.

- It’s very common to be between two different teams, trying to make them cooperate. But this is easier said than done, because each team has its own agenda.

  Another important detail is that there’s no hierarchical relation, so at best you can only influence the teams, and that’s a difficult skill.

- It’s great to have something designed, point a finger in the air and say: “Make It So”, but you need to follow up on the progress of the different tasks and ensure that the actual implementation makes sense and achieves the objectives.

- There’s also the need to be self-reflective and acknowledge that every step needs to be evaluated critically to be sure that it’s in the right direction.

The objective of working on the Architecture of the system is not to mandate top-down a Perfect Design™, no matter what. Instead, it is to help the different teams work better. There needs to be feedback in the process.

Surprise, it’s all dealing with people, it’s all soft skills! Influence, teaching, negotiation, communication… While the tech skills and experience are obviously a prerequisite, in the day-to-day the muscles that get flexed the most are the soft-skills ones.

A consequence of this is that pressure and struggle manifest in a different way, as you are not only depending on something that a machine can do, as is typical for engineers, but on what other people can do.

It’s fascinating work, though. I like it a lot because, when everything comes together, it’s great to feel that you are helping a lot of teams to work better together and that your vision of the direction the system should follow gets implemented.

And you also get to draw a lot of diagrams! Diagrams everywhere!

Futures and easy parallelisation

Here is one small snippet that is very useful when dealing with quick scripts that perform slow tasks and can benefit from running in parallel.

Enter the Futures

Futures are a convenient abstraction in Python for running tasks in the background.

There are also asyncio Futures, which work in a similar way but require an asyncio loop. The Futures we are talking about in this post work over regular multitasking: threads or processes.

The operation is simple: you create an Executor object and submit a task, defined as a callable (a function) and its arguments. This immediately returns a Future object, which can be used to check whether the task is in progress or finished and, if it’s finished, to retrieve the result (the returned value of the function).

Executors can be based on Threads or Processes.
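As a minimal sketch of that flow, here is a single task submitted to an executor and then checked. The slow_double function and its short sleep are made up for illustration, standing in for any slow operation.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_double(x):
    # Hypothetical task: sleep briefly to simulate slow work
    time.sleep(0.2)
    return x * 2


executor = ThreadPoolExecutor(max_workers=1)

# submit() returns a Future right away, without waiting for the task
future = executor.submit(slow_double, 21)

# Right after submitting, the task is usually still pending or running
print(future.done())

# result() blocks until the task has finished and returns its value
value = future.result()
print(value)

# Once the result is available, the future reports that it is done
print(future.done())

executor.shutdown()
```

ProcessPoolExecutor, from the same module, exposes the same submit interface, so a sketch like this one should mostly carry over, keeping in mind that with processes the function and its arguments need to be picklable.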

The snippet

Ok, that’s all great, but let’s see the code, which is quite easy. Typically, in your code you will have a list of tasks that you want to execute in parallel. Probably you tried sequentially first, but it takes a while. Running them in parallel is easy following this pattern.

    from concurrent.futures import ThreadPoolExecutor

    NUM_WORKERS = 5
    executor = ThreadPoolExecutor(NUM_WORKERS)

    def function(x):
        return x ** 2

    arguments = [[2], [3], [4], [5], [6]]
    futures_array = [executor.submit(function, *arg) for arg in arguments]
    result = [future.result() for future in futures_array]
    print(result)

The initial block creates a ThreadPoolExecutor with 5 workers. The number can be tweaked depending on the system. For slow I/O operations like calling external URLs, this number could easily be 10 or 20.

Then, we wrap our task into a function that returns the desired result. In this case, it receives a number and returns it to the power of two.

We prepare the arguments, in this case the numbers to calculate. Note that each element needs to be a tuple or list, as it will be passed to the submit method of the executor with *arg.

Finally, the juicy bit. We create a futures_array by submitting all the information to the executor. This returns immediately with all the future objects.

Next, we call the .result() method on each future, retrieving the result. Note that the result method is blocking, so the code won’t continue until all tasks are done.

Et voilà! The tasks are run in parallel in 5 workers!
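Because .result() blocks, iterating over the futures in the order they were submitted returns the results in that same order, even if a later task finishes first. As a hedged side note, the same module offers as_completed, which is not used in the snippet above and yields futures as they finish instead. The sketch below compares both, using a made-up wait_and_return task.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def wait_and_return(seconds):
    # Hypothetical task: the longer the argument, the later it finishes
    time.sleep(seconds)
    return seconds


delays = [0.3, 0.1, 0.2]

with ThreadPoolExecutor(max_workers=3) as executor:
    futures = [executor.submit(wait_and_return, d) for d in delays]
    # Yields each future as soon as it finishes, regardless of submit order
    by_completion = [f.result() for f in as_completed(futures)]
    # Iterating over the original list keeps the submission order
    by_submission = [f.result() for f in futures]

print(by_completion)
print(by_submission)
```

The second list always matches the order of delays, while the first one typically comes out sorted by how long each task took. For small scripts the submission order is usually what you want, so the original pattern is enough.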

A more realistic scenario

Sure, it would be strange to calculate squared numbers in Python in that way. But here is a slightly more common scenario for this kind of parallel operation: retrieving web addresses.

    import time
    from concurrent.futures import ThreadPoolExecutor
    from urllib.parse import urljoin

    import requests

    NUM_WORKERS = 2
    executor = ThreadPoolExecutor(NUM_WORKERS)

    def retrieve(root_url, path):
        url = urljoin(root_url, path)
        # Log the start time of each request to follow the progress
        print(f'{time.time()} Retrieving {url}')
        result = requests.get(url)
        return result

    arguments = [('https://google.com/', 'search'),
                 ('https://www.facebook.com/', 'login'),
                 ('https://nyt.com/', 'international')]
    futures_array = [executor.submit(retrieve, *arg) for arg in arguments]
    result = [future.result() for future in futures_array]
    print(result)

In this case, our function retrieves a URL based on a root and a path. As you can see, the arguments are, in each case, a tuple with the two values.

The retrieve function joins the root and the path and gets the URL (we use the requests module). The print statements work as logs to see the progress of the tasks.

If you execute the code, you’ll notice that the first two tasks start almost at the same time, while the third takes a little more time and starts around 275ms later. That’s because we created two workers, and while they are busy, the third task needs to wait. All this is handled automatically.

    1645112889.005687 Retrieving https://google.com/search
    1645112889.006123 Retrieving https://www.facebook.com/login
    1645112889.2814898 Retrieving https://nyt.com/international
    [<Response [200]>, <Response [200]>, <Response [200]>]

So that’s it! This is a pattern that I use from time to time in small scripts. It’s very handy and gives great results, without having to complicate the code to deal with cumbersome thread classes or similar.

One more thing

You may structure the submit calls with the specific number of parameters, if you prefer:

    arguments = [('https://google.com/', 'search'),
                 ('https://www.facebook.com/', 'login'),
                 ('https://nyt.com/', 'international')]
    futures_array = [executor.submit(retrieve, root, path) for root, path in arguments]
    result = [future.result() for future in futures_array]

The *arg part allows using a variable number of arguments, relying on defaults depending on the specific call, and allows for fast copy/paste.
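As a small sketch of how *arg copes with a variable number of arguments, here the second tuple has a single element and the function falls back to its default. The build_url function, its default path and the example.com addresses are all hypothetical, and no request is made.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urljoin


def build_url(root_url, path='index.html'):
    # path falls back to its default when the tuple has a single element
    return urljoin(root_url, path)


# Tuples of different lengths: the second one relies on the default path
arguments = [('https://example.com/', 'search'),
             ('https://example.org/',)]

with ThreadPoolExecutor(2) as executor:
    futures = [executor.submit(build_url, *arg) for arg in arguments]
    urls = [f.result() for f in futures]

print(urls)
# ['https://example.com/search', 'https://example.org/index.html']
```

With the explicit root, path unpacking the second tuple would fail, which is the trade-off between the two styles.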

“Python Architecture Patterns” now available!

My new book is available!

Current software systems can be extremely big and complex, and Software Architecture deals with the design and tweaking of fundamental structures that shape the big picture of those systems.

The book talks in greater detail about what Software Architecture is and what its challenges are, and describes different architecture patterns that can be used when dealing with complex systems. All to create an “Architectural View” and grow the knowledge on how to deal with big software systems.

All the examples in the book are written in Python, but most concepts are language agnostic.

This joins the collection of my books! I’m quite proud to have been able to write four books already…

Python Automation Cookbook for only $5!

There’s currently a Christmas offer on the Packt website where you can get all ebooks for just $5 or 5€.

It’s a great opportunity to get the second edition of the Python Automation Cookbook and improve your Python skills for this new year!

Also available, of course, is Hands-On for Microservices with Python, for people interested in learning about Docker, Kubernetes, and how to migrate Monolithic services into a Microservices structure.

A great opportunity to increase your tech library!