Still working from home after all those years

We are all experts in working from home now, right?

Since March 2020 we’ve been stuck in this strange situation where time has stopped and we are working regularly from home, or at least almost everyone in the software industry is. We were already a bit ahead of the curve.

I had been seeing more and more remote work for at least a few years before that. The first time that I did any meaningful remote work was around 2005. Back then, I was working as a consultant and I had regular meetings with customers onsite, carrying a laptop. Some days I would finish the reports and other work from home, as it didn’t make sense to go back to the office. It was a small company, but most people will have had similar experiences, with some days not going to the office.

It took a while for me to find myself again in a situation where remote work was common, but in the second half of the 2010s it became more and more so. I knew some people who moved countries and kept their jobs, so they worked mostly remotely. And on-call work routinely requires people to be able to connect from their homes, setting up a VPN, etc.

That was growing over time. Three years ago, it was common where I worked to work from home one or two days a week, and I’ve been taking advantage of that to go overseas to Spain for a month and keep working remotely most days.

But now it has suddenly accelerated, and we have experienced quite a lot of working from home. I’m just going to put in writing some of the elements I think are quite important in this, or at least they are for me.

Space.

While for working occasionally, a day or two at a time, you can sit at the kitchen table or on the couch, this is hardly a sustainable option. You need a dedicated space that can be used for working effectively day after day.

“Python Architecture Patterns” book announced!

We are getting close to the end of the year, but I have great news! A new Python book is on the way, and will be released soon.

Current software systems can be extremely big and complex, and Software Architecture deals with the design and tweaking of fundamental structures that shape the big picture of those systems.

The book talks in greater detail about what Software Architecture is and what its challenges are, and describes different architecture patterns that can be used when dealing with complex systems. All to create an “Architectural View” and grow the knowledge of how to deal with big software systems.

All the examples in the book are written in Python, but most concepts are language agnostic.

This new book is related to my previous one, “Hands-On Docker for Microservices with Python”, as that one also deals with certain architecture elements, though the new book takes a broader approach.

With this book, I will have four published technical books; well, three if you count the two editions of the Python Automation Cookbook as one. I count them as two, as the writing effort was there!

I hope that it will be fully available soon; so far it’s only in pre-sale. Stay tuned!

Basic Python for Data Processing Workshop

I’ll be running a workshop at the European ODSC this 8th of June.

The objective of the session is to provide some basic understanding of Python as a language to be used for data processing. Python syntax is very readable and easy to work with, and its rich ecosystem of libraries makes it one of the most popular programming languages in the world.

We will see some common tools and characteristics of Python that are essential for analysing data, like how to import data from files and generate results in multiple formats. We will also see some ways to speed up the processing of data.

This workshop is aimed at people with little to no knowledge of Python, though some programming knowledge is required, even if it’s in a different language.

Session Outline

Lesson 1: Basic Python.

Introduction to basic operations with Python that are essential for treating data and creating powerful scripts. Also, we will learn how to create a virtual environment to install third-party libraries and discuss how to search for and find powerful libraries. It will also include creating flexible command-line interfaces.

Lesson 2: Dealing with files

Read and write multiple kinds of files, from reading CSVs to ingest data to writing HTML reports including graphs with Matplotlib. We will also cover how to create Word files and PDFs.

Lesson 3: Efficient data treatment

Learn how to deal with data in an efficient manner in Python, from using the right data types, to using specialized libraries like Pandas, to using tools to run Python code faster.
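To give a flavour of the kind of script these lessons build towards, here is a minimal sketch that reads CSV data, aggregates it and renders a small HTML table. It uses only the standard library; the sample data, the column names (`product`, `units`) and the function names are made up for illustration and are not taken from the workshop material.

```python
import csv
import io
from collections import defaultdict
from html import escape

# Hypothetical sample data. In a real script this would come from a file,
# e.g. open("sales.csv", newline="").
SAMPLE_CSV = """product,units
apples,3
pears,5
apples,4
"""


def total_units(csv_file):
    """Sum the 'units' column per product from a CSV file-like object."""
    totals = defaultdict(int)
    for row in csv.DictReader(csv_file):
        totals[row["product"]] += int(row["units"])
    return dict(totals)


def to_html(totals):
    """Render the totals as a small HTML table, sorted by product name."""
    rows = "\n".join(
        f"<tr><td>{escape(product)}</td><td>{units}</td></tr>"
        for product, units in sorted(totals.items())
    )
    return f"<table>\n<tr><th>Product</th><th>Units</th></tr>\n{rows}\n</table>"


totals = total_units(io.StringIO(SAMPLE_CSV))
report = to_html(totals)
print(report)
```

For bigger datasets, a library like Pandas would probably replace the manual loop, but the overall shape (read, process, report) stays the same.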

Background Knowledge

Programming knowledge, even if it’s not in Python

Got interviewed as PyDev of the Week!

I got interviewed as part of Mike Driscoll’s PyDev of the Week series. You can check the interview here.

Podcast Wrong Side of Life

I started a new podcast with Sana Khan (@LegalSana_Khan and http://sanakhanwrites.com/), talking about things that interest us, like society stuff, tech, laws, movies… We have already recorded a few episodes, so perhaps you want to give it a listen.

You can listen to it at http://wrongsideoflife.com

It’s also available on Apple Podcasts and Spotify, and you can search for it directly in common podcast applications like Overcast or Castro.

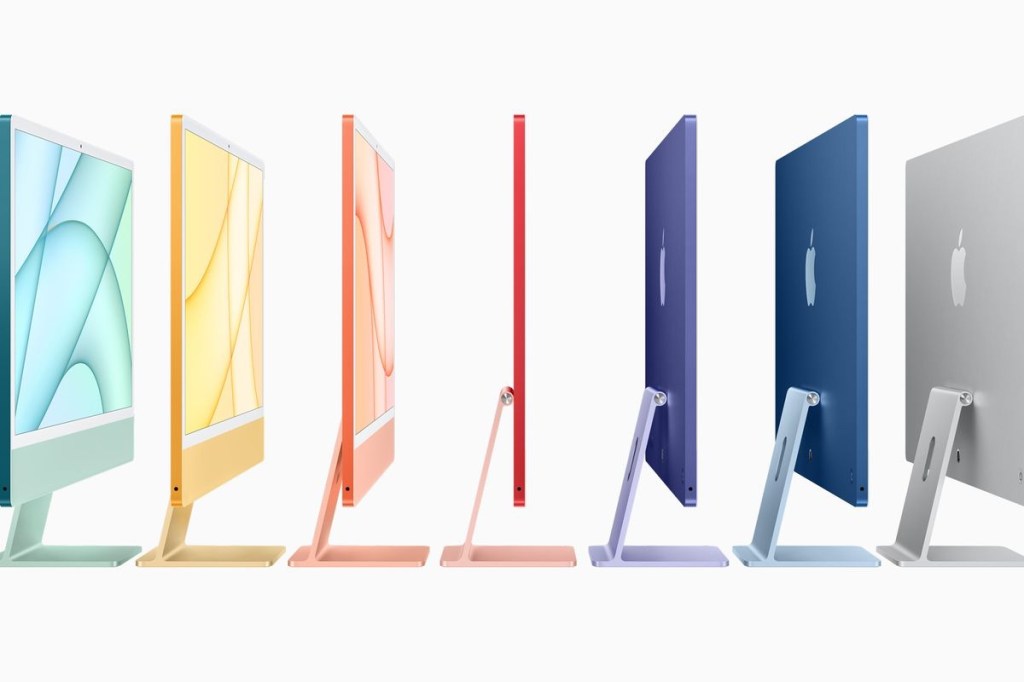

Colourful iMacs again

I really love the new iMacs. The design looks amazing and it reintroduces something long missing in Apple computers: vibrant colours.

I’ll be waiting for the “big” iMac (the current 27-inch size), which I suspect will be renamed iMac Pro or iMac Plus to bring clarity to the product line.

The rest of the details introduced are what you’d expect from this iteration:

Apple Silicon, still the M1 chip. A new chip will probably be presented in Autumn, likely after the introduction of the new iPhone. Apple Silicon appears to have two tiers: one for all the “consumer” products, which covers the M1 products introduced so far (Mac Mini, iPad Pro, MacBook Air and iMac), and a more powerful “pro” option (bigger iMac, MacBook Pro). The Mac Pro is a special case that will probably get either multiple “pro” chips or a special “top” chip.

Touch ID in the wireless keyboard was inevitable, as it’s a really convenient feature on laptops. Same for the webcam, which was due for an upgrade. The next step will be to incorporate Face ID as well.

The new design also points to what to expect in the next few years: a thinner, lighter design which will remove the chin at some point. Same for the bezels, which will become smaller. The addition of an external power adapter is strange, though it moved the Ethernet plug out of the back, and I imagine it makes the screen lighter.

I hope that it has the same or similar colours as the ones introduced, because they look amazing… And the matching mice, trackpads and keyboards are lovely.

macOS, Apple’s core

These days mark the 20th anniversary of Mac OS X, later renamed as macOS.

Really, the underlying tech is the same, but the naming allows them to move past 10.X into unexplored territories.

There’s a lot of talk these days about Macs, especially after the introduction of Apple Silicon, which certainly is an exciting move. There were some years when there was debate across the tech world on whether Apple should drop the Macs and focus on iPads or iPhones. Because the old Personal Computer paradigm is dead, right?

Nonsense.

While the famous analogy by Steve Jobs about cars and trucks is valid (just because there are more cars doesn’t mean that the trucks are going away, as they are better suited to certain tasks), I think it doesn’t really capture the essence of why smartphones and tablets are not really replacements for Personal Computers (be they desktops or laptops).

It’s not only that virtually all the software that runs on iPhones and iPads is built on Macs. It’s that their builders use Macs for their main workflows.

Sure, there are some people who can run their work on iPads or even 90% of their flow on smartphones. But the form factor makes it much more productive to work on a full personal computer. With a good keyboard, with key shortcuts, with more space on the screen. The fact that the iPad now uses an external keyboard is proof of that.

Obviously, macOS is tailored for all those elements, in the same way that the iPhone is tailored for being handled with one hand, with less precise input. There, writing a long email becomes more complicated, and copy/paste is noticeably more frustrating. The Mac makes all those actions easier.

And that’s why the Mac is the lynchpin of everything at Apple. Because it’s at the source of all other products. It’s where the iPhone is designed and where the iPad and Apple TV apps are written.

macOS is Apple’s core.

PyLadies Architecture Talk

Last November I gave an online talk (as 2020 demands) at PyLadies Dublin about the general software architecture that we are using in one of the projects that I’m working on, a proctoring tool for online exams. It starts at minute 26 of the video; the other talks are also quite interesting.

(The talk is directly accessible at the URL https://www.youtube.com/watch?v=UIY-Z7daEG0&t=26m10s )

Hope you like it!

The price of a bad connection when working from home

I wrote a guest post on the B2beematch blog about some of the connectivity challenges of working remotely.

The COVID-19 pandemic has greatly impacted our lives, and people lucky enough to be able to work from home face a big challenge in remaining productive in dire times. I tried to go over some of the elements that affect connectivity and the network capacity needed to remain online, which is crucial for remote working.

You can check the post here.

2nd Edition for Python Automation Cookbook now available!

Good news everyone! There’s a new edition of the Python Automation Cookbook! A great way of improving your Python skills for practical tasks!

Like the first edition, it’s aimed at people who already know a bit of Python (not necessarily developers). It describes how to automate common tasks. Things like working with different kinds of documents, generating graphs, sending emails, text messages… You can check the whole table of contents for more details.

It’s written in the cookbook format, so it’s a collection of recipes to read and reference independently. There are also chapters combining the individual recipes to create more complex tasks. I tried to make it accessible and practical.

This new edition has the whole content reviewed. It also includes three new chapters. They cover cleaning up data, adding tests to the code, and how to start using Machine Learning. The ML chapter uses existing online APIs. This is a great way to get great results without having to learn complex math.

The book is available for purchase on the Packt website as well as on Amazon.

Even if it’s a second edition, it’s impressive how much work goes into writing a book. This book joins the first edition and Hands-On Docker for Microservices with Python. This means that I have three published books, which is super exciting.